Navigating AI Integration: Privacy Challenges in Legal Practice

Introduction:

The integration of Artificial Intelligence (AI) in the legal field is revolutionizing the way legal practitioners operate. By leveraging AI technologies, lawyers can automate workflows, enhance decision-making, and ultimately provide a better client experience. However, alongside these advancements comes a host of privacy-related challenges that legal professionals must carefully navigate. This article delves into these complexities, outlining the role of AI in legal practice, the existing privacy frameworks, and the specific challenges that arise during integration.

Introduction to AI Integration in Legal Practice

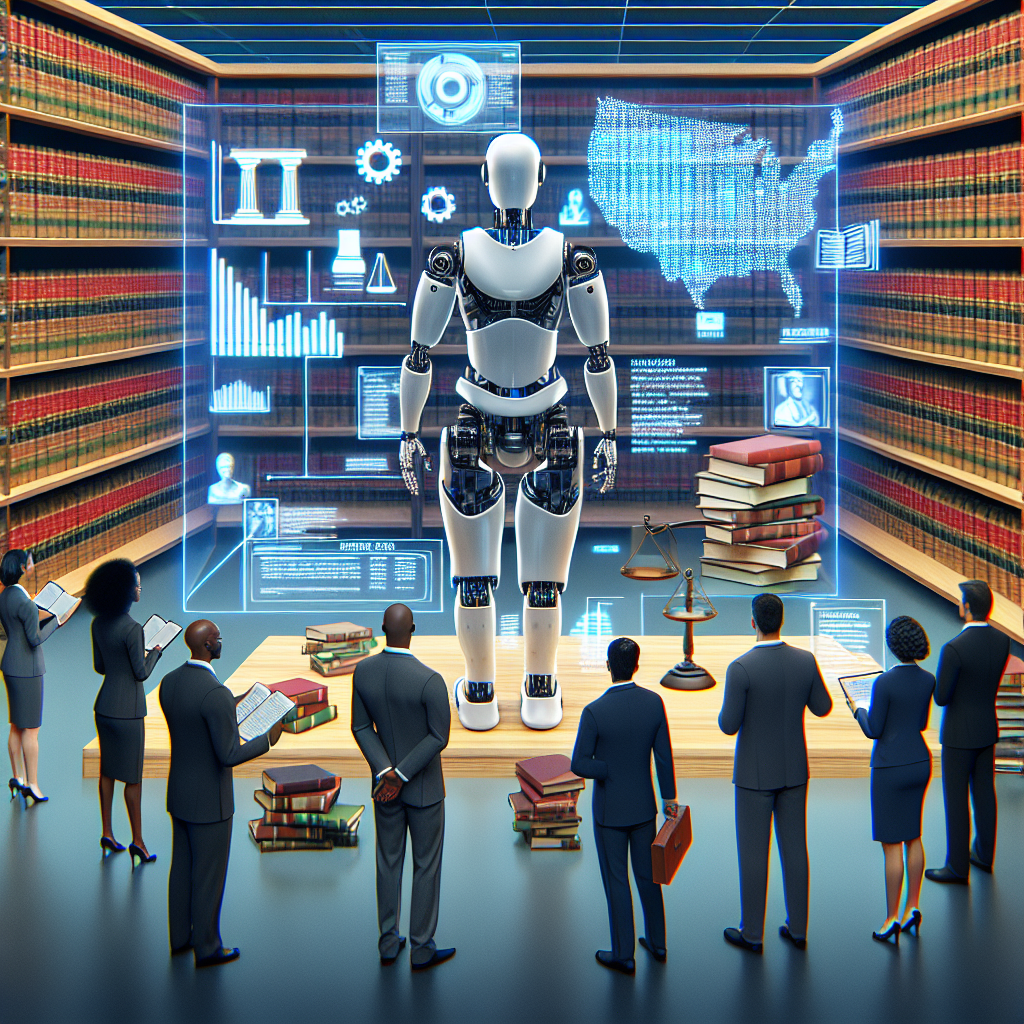

The application of AI in legal practice has evolved rapidly, driven by growing demand for efficiency and accuracy in legal services. Legal professionals use AI for functions such as document analysis and legal research, which can substantially reduce the time spent on traditionally labor-intensive tasks. AI is also reshaping operational models within firms, prompting a re-evaluation of privacy measures. Understanding the implications of AI from a privacy perspective is therefore crucial for contemporary legal practitioners.

AI’s influence in the legal sector is multifaceted. In document review, for instance, natural language processing allows machines to parse and categorize legal documents quickly. Legal research has likewise been enhanced, enabling lawyers to sift through extensive data sets to find pertinent case law and precedents far faster than manual searching allows. Despite these benefits, the reliance on data-driven solutions raises significant privacy concerns that call for due diligence.

Ultimately, while AI integration offers promising advancements to legal practice, it also creates a complex landscape of privacy challenges. The anticipation of better operational efficiencies must be matched with robust privacy frameworks, ensuring that client confidentiality is never compromised in the name of progress.

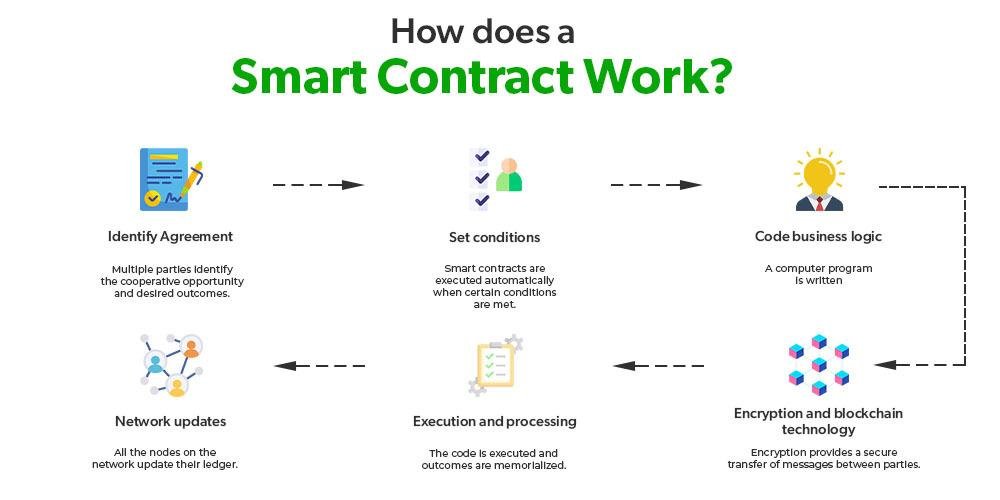

The Role of AI in Legal Practice

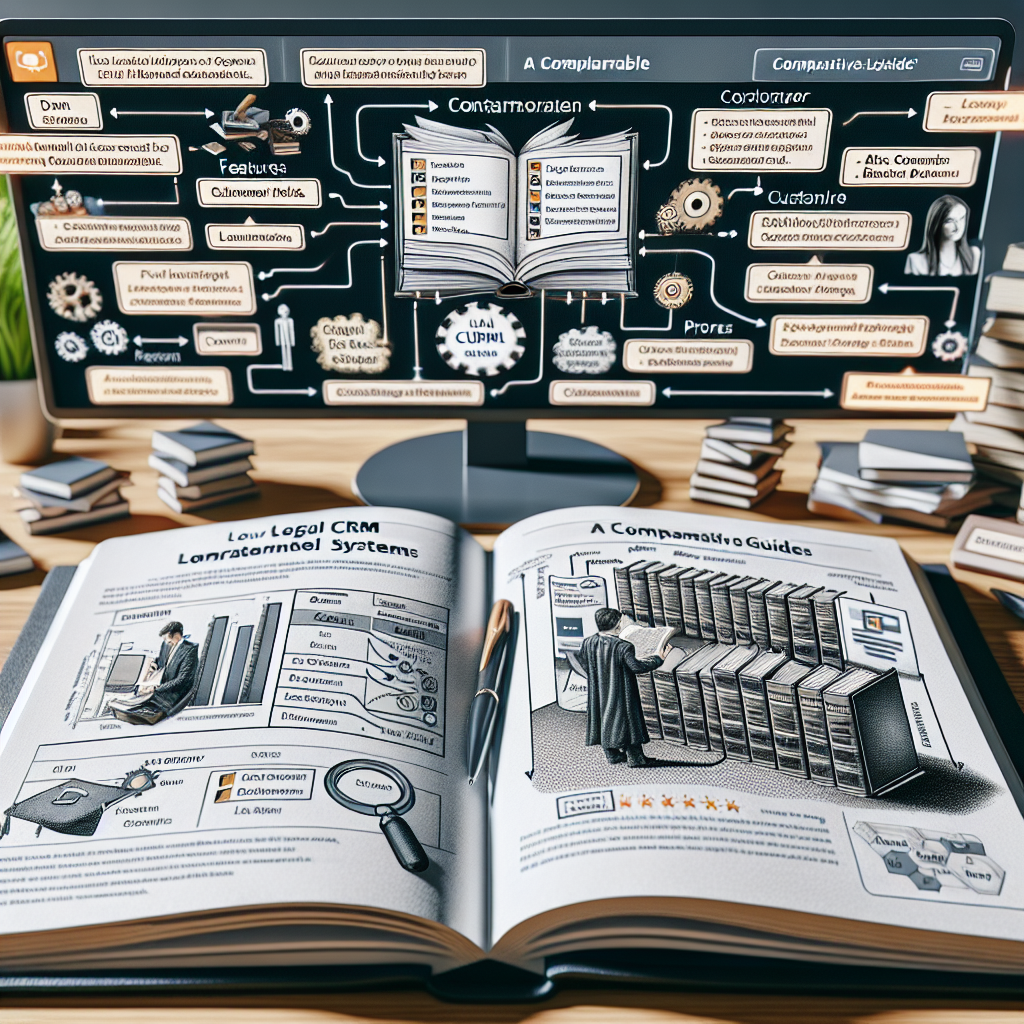

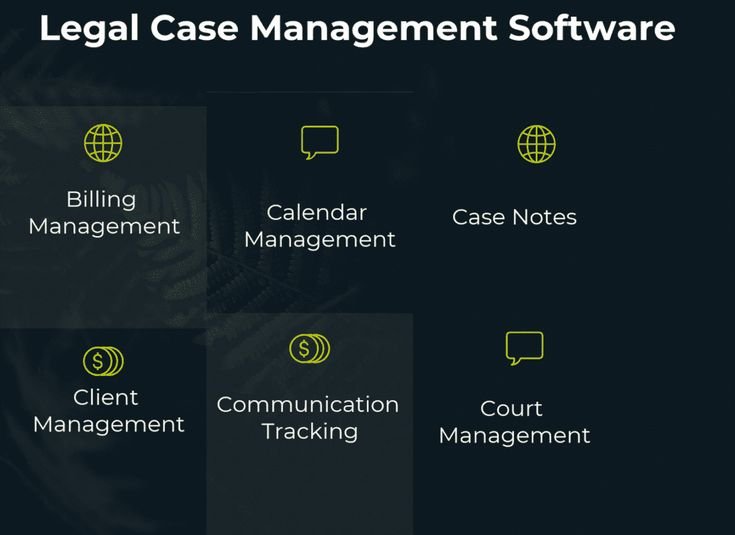

AI technology has found multiple applications in legal practice, significantly transforming workflows. One primary area of impact is document review. AI-powered platforms can analyze extensive legal documents rapidly and accurately, which minimizes the time and costs associated with manual review processes. For example, firms employing AI document review tools can manage large-scale litigation efficiently, ensuring that vital documents are identified and prioritized without exhaustive manual labor.

Legal research has also benefited from AI. Machine learning algorithms curate and analyze vast repositories of legal texts, allowing lawyers to find relevant precedents and case law quickly. This is particularly evident in firms handling high volumes of litigation, where the speed of obtaining information can influence the outcome of cases. AI-driven research tools are designed to deliver contextually relevant search results that support legal teams’ decision-making and strategic planning.

Predictive analytics is another facet of AI’s role in legal practice, helping attorneys assess potential case outcomes based on historical data. By analyzing trends and past rulings, lawyers can better formulate case strategies and advise clients on likely outcomes. AI-driven contract analysis tools also help identify compliance risks so they can be managed, streamlining the workload for legal teams engaged in contract negotiations. Despite these efficiencies, however, legal practitioners must remain vigilant about the privacy challenges inherent in technology fueled by sensitive data.

Understanding the Privacy Framework in Legal Contexts

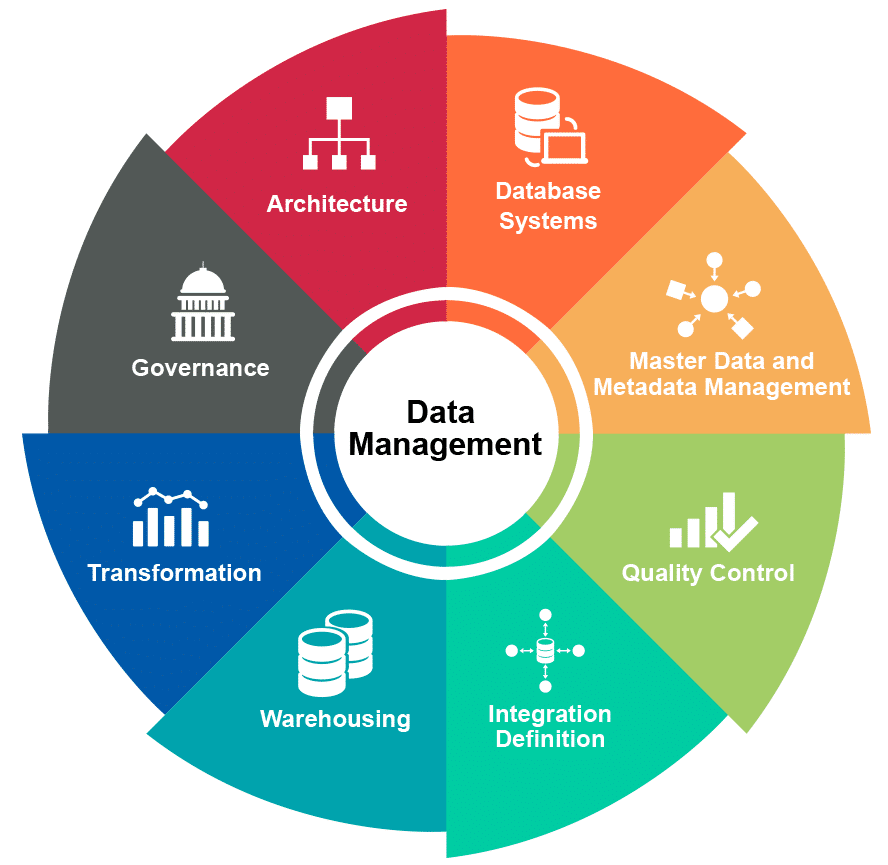

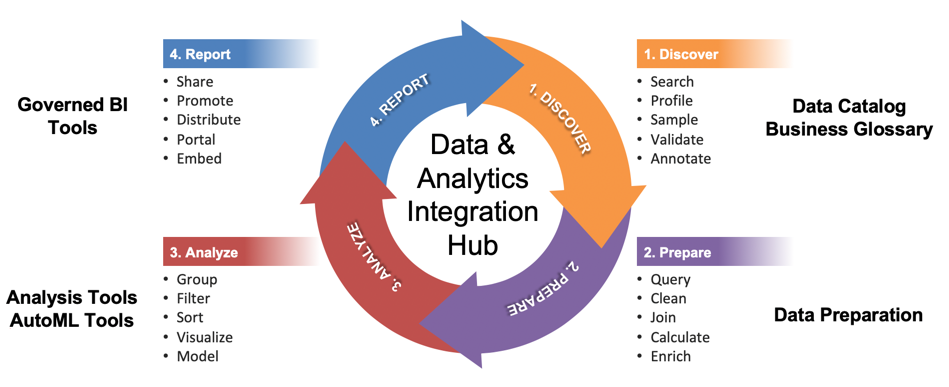

The legal profession is subject to an intricate web of ethical guidelines and privacy regulations that govern the management of sensitive data. With the integration of AI, legal practitioners must navigate existing privacy frameworks to ensure compliance and protect client information effectively. Central to these frameworks are regulations such as the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA), which establish stringent data protection requirements.

The GDPR poses particular challenges for legal practices that process the personal data of individuals in the European Union. Under this regulation, organizations must be transparent about their data collection practices and must inform data subjects, including clients, of how their information will be used. Any AI application in legal practice must also support data subjects’ rights over their personal data, including the rights of access, rectification, and erasure. Non-compliance can lead to substantial fines (for the most serious infringements, up to €20 million or 4% of worldwide annual turnover, whichever is higher), so legal practitioners should build robust data governance frameworks into any AI rollout.
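To make the rights of access, rectification, and erasure more concrete, here is a minimal Python sketch, assuming a firm holds client personal data both in its own records and in a copy kept by an AI tool (for example, a search index). The names `DataStore` and `SubjectRightsHandler` are hypothetical and do not come from any product or library. The point it illustrates is that a request has to reach every copy of the data, including copies an AI tool derives. Erasure is not absolute under the GDPR (Article 17(3) lists exceptions, such as data needed for the establishment, exercise, or defence of legal claims), and this sketch does not model those exceptions.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class DataStore:
    """Hypothetical store of personal data keyed by data-subject ID."""
    name: str
    records: Dict[str, Dict[str, Any]] = field(default_factory=dict)


class SubjectRightsHandler:
    """Hypothetical router that applies access, rectification, and erasure
    requests to every registered store, including copies held by an AI tool."""

    def __init__(self, stores: List[DataStore]) -> None:
        self.stores = stores

    def access(self, subject_id: str) -> Dict[str, Dict[str, Any]]:
        # Return a copy of everything held about the subject, grouped by store.
        return {
            s.name: dict(s.records[subject_id])
            for s in self.stores
            if subject_id in s.records
        }

    def rectify(self, subject_id: str, field_name: str, value: Any) -> int:
        # Correct the field wherever it is held; return the number of stores updated.
        updated = 0
        for s in self.stores:
            record = s.records.get(subject_id)
            if record is not None and field_name in record:
                record[field_name] = value
                updated += 1
        return updated

    def erase(self, subject_id: str) -> List[str]:
        # Delete the subject from every store; return the names of affected stores.
        # Exemptions under GDPR Article 17(3) are NOT checked here.
        erased = []
        for s in self.stores:
            if s.records.pop(subject_id, None) is not None:
                erased.append(s.name)
        return erased


if __name__ == "__main__":
    crm = DataStore("crm", {"c-001": {"name": "A. Client", "email": "old@example.com"}})
    ai_index = DataStore("ai_index", {"c-001": {"email": "old@example.com"}})
    handler = SubjectRightsHandler([crm, ai_index])
    print(handler.rectify("c-001", "email", "new@example.com"))  # 2
    print(handler.access("c-001"))
    print(handler.erase("c-001"))  # ['crm', 'ai_index']
```

Registering the AI tool's copy as just another store is a deliberate design choice: if the index is left out, a correction or deletion in the firm's own records would silently leave stale personal data inside the tool.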

For firms handling healthcare-related matters, HIPAA imposes strict privacy and security requirements that must be observed during AI integration. This is particularly relevant when a firm handles Protected Health Information (PHI) on behalf of a covered entity, in which case it typically acts as a business associate, and when legal technology solutions process that PHI. Legal practitioners must ensure that AI applications provide adequate safeguards to protect sensitive healthcare data, maintain confidentiality, and prevent unauthorized access. Overall, understanding the privacy framework surrounding legal practice is essential for managing AI integration successfully while safeguarding client trust.
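One common safeguard is to strip obvious identifiers from text before it is sent to an external AI service. The Python sketch below does this with regular expressions; the pattern set and the `redact` function are illustrative assumptions, not a standard tool. It is not HIPAA de-identification: the Safe Harbor method covers 18 categories of identifiers, and simple patterns like these miss names, addresses, and many other forms, so it should be treated as one defensive layer rather than a compliance control.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only: they cover a few identifier types and will miss
# names, addresses, and many other forms of PHI.
PHI_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "MRN": re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE),
}


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count what was removed.

    Patterns are applied in dictionary order, so more specific ones (SSN)
    run before broader ones (PHONE, DATE).
    """
    counts: Dict[str, int] = {}
    for label, pattern in PHI_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    note = ("Patient MRN: 448812, DOB 04/12/1961, SSN 123-45-6789, "
            "call 555-867-5309 or jane.doe@example.com.")
    redacted, found = redact(note)
    print(redacted)
    # Patient [MRN], DOB [DATE], SSN [SSN], call [PHONE] or [EMAIL].
    print(found)
```

Returning the counts alongside the redacted text lets a firm log what kind of data was stripped from each request without logging the data itself.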

Key Regulations Impacting Legal Practice

Beyond GDPR and HIPAA, several other regulations and principles shape the privacy landscape in legal practice. One pivotal principle is attorney-client privilege, a cornerstone of the legal profession. Legal professionals must ensure that AI systems processing sensitive communications follow strict confidentiality guidelines that prevent unauthorized access and breaches. Privilege must be carefully preserved, because disclosure to an unauthorized third party could risk waiving it, jeopardizing not only client relationships but also the integrity of the practice.

State-level regulations, such as the California Consumer Privacy Act (CCPA), further complicate the privacy landscape for legal practitioners. The CCPA grants California residents rights over their personal data, including the right to know what information is being collected and the right to request deletion. Firms that fall within the CCPA’s scope, which covers for-profit businesses meeting certain thresholds, must ensure compliance with it while integrating any AI technologies, demonstrating transparency and accountability to clients.

The diversity and complexity of privacy regulations necessitate that legal practitioners conduct thorough assessments when implementing AI solutions. This includes evaluating how data is collected, processed, and stored, with an emphasis on implementing stringent data protection measures. By maintaining transparency with clients regarding data usage and adhering to legal obligations, law firms can successfully navigate the privacy challenges posed by AI integration while building and maintaining client trust.

Identifying Specific Privacy Challenges Associated with AI

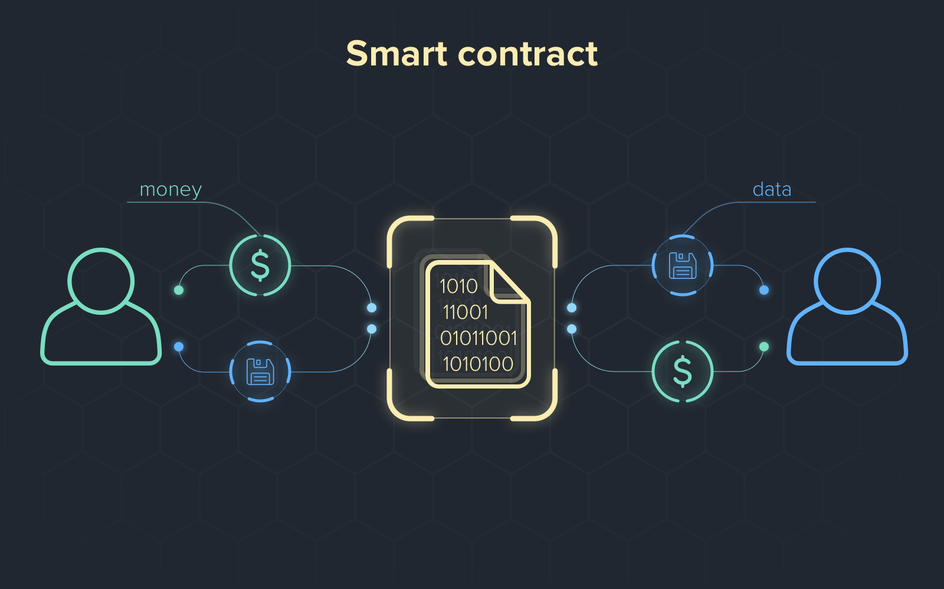

The integration of AI into legal practice introduces specific privacy challenges that warrant careful consideration by legal professionals. One prominent concern is the risk of inadvertent data exposure during AI processing or analysis. Due to the vast amounts of data being analyzed by AI systems, there is a potential for sensitive client information to be exposed unintentionally, either through a flawed processing algorithm or inadequate security measures. Legal practitioners must ensure that AI tools are implemented with strong data protection practices to mitigate this risk.
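One way to reduce accidental exposure is to route every AI request through a single checkpoint that enforces matter-level access and logs each decision. The Python sketch below assumes a hypothetical `AIGateway` and a firm policy, also an assumption, under which only matters cleared for AI processing may be submitted and documents marked privileged are never sent to the tool. A real deployment would integrate with the firm's identity and document management systems rather than in-memory dictionaries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Document:
    doc_id: str
    matter_id: str
    privileged: bool = False  # assumed flag set during the firm's own review


@dataclass
class AIGateway:
    """Hypothetical checkpoint placed in front of an AI tool."""
    matter_access: Dict[str, Set[str]]  # user -> matters the user is staffed on
    ai_approved_matters: Set[str]  # matters cleared for AI processing
    audit_log: List[str] = field(default_factory=list)

    def can_submit(self, user: str, doc: Document) -> bool:
        # All three conditions must hold for the document to be submitted.
        allowed = (
            doc.matter_id in self.matter_access.get(user, set())
            and doc.matter_id in self.ai_approved_matters
            and not doc.privileged
        )
        decision = "ALLOW" if allowed else "DENY"
        # Record every decision, including denials, for later review.
        self.audit_log.append(
            f"{decision} user={user} doc={doc.doc_id} matter={doc.matter_id}"
        )
        return allowed


if __name__ == "__main__":
    gateway = AIGateway(
        matter_access={"alice": {"M-1"}, "bob": {"M-2"}},
        ai_approved_matters={"M-1"},
    )
    print(gateway.can_submit("alice", Document("d-1", "M-1")))  # True
    print(gateway.can_submit("bob", Document("d-1", "M-1")))  # False: not on the matter
    print(gateway.can_submit("alice", Document("d-2", "M-1", privileged=True)))  # False
    print("\n".join(gateway.audit_log))
```

Denying by default (a user absent from `matter_access` gets an empty set) means a misconfiguration fails closed rather than exposing client data.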

Additionally, the potential for unintentional bias in AI algorithms presents significant ethical concerns. If AI systems are trained using biased datasets, they can perpetuate discrimination or lead to skewed outcomes affecting clients. For legal practitioners, this risk could undermine the fairness of legal processes and ultimately compromise their ethical responsibilities. To counteract this challenge, firms must conduct rigorous assessments of the datasets used to train AI systems and prioritize strategies for identifying and addressing bias.
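A simple starting point for auditing AI outputs is to compare favorable-outcome rates across groups. The Python sketch below computes each group's rate relative to the highest-rate group and flags ratios below 0.8, borrowing the "four-fifths" rule of thumb from US employment-selection guidance. The function names and the data format (pairs of a group label and an outcome) are assumptions for illustration. A low ratio is a signal to investigate, not proof of bias, and a fuller assessment would use additional metrics. Collecting group labels for such an audit is itself processing of personal data and should be handled accordingly.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, favorable) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, is_favorable in outcomes:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    """Each group's rate divided by the highest group's rate."""
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so there is nothing to compare.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


def flag_groups(outcomes: Iterable[Tuple[str, bool]], threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the threshold (four-fifths rule of thumb)."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    outcomes = ([("A", True)] * 6 + [("A", False)] * 4
                + [("B", True)] * 3 + [("B", False)] * 7)
    print(selection_rates(outcomes))  # {'A': 0.6, 'B': 0.3}
    print(flag_groups(outcomes))  # ['B']
```

In this example group B's rate (0.3) is half of group A's (0.6), well under the 0.8 threshold, so B is flagged for closer review of the underlying data and model behavior.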

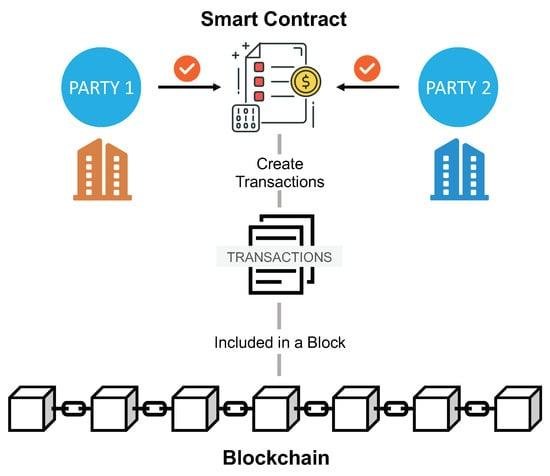

A further challenge is ambiguity surrounding data ownership. As legal practices increasingly rely on cloud-based AI platforms, questions about data ownership and access rights can complicate matters. Legal professionals must clarify ownership rights when using third-party AI services, particularly who retains control over client data and the legal implications of data processing by external entities. Effective contract negotiation and vendor assessments are vital to ensuring that client privacy remains intact and that legal rights are preserved throughout the data processing lifecycle.

Practical Challenges

In addition to these specific privacy challenges, the implementation of AI technologies in legal practice can lead to practical hurdles associated with vendor compliance. Many law firms turn to third-party AI service providers for solutions; however, it is essential to ensure that these vendors maintain compliance with relevant privacy regulations and ethical standards. The responsibility to protect client data ultimately lies with the firm, which requires diligent vetting of third-party vendors to confirm their data protection practices align with legal obligations.

Furthermore, the deployment of AI tools often necessitates updated training for legal practitioners. As AI systems continuously evolve, law firms must invest in educating their teams about how to use these tools effectively and ethically. Legal practitioners must understand the technological implications of AI, the privacy risks involved, and how to navigate these complexities when serving clients. Training sessions should address issues such as data handling practices, compliance with regulations, and recognizing biased outcomes in AI analysis.

Lastly, maintaining client trust throughout the AI integration process requires ongoing communication and transparency regarding data usage and protection. Legal firms must demonstrate their commitment to safeguarding client data through clear policies, consistent engagement, and an unambiguous stance on privacy measures taken during AI implementation. Establishing robust communication channels with clients helps reassure them that privacy remains a fundamental priority amidst the technological transformation in legal practice.

Conclusion:

Navigating the integration of AI into legal practice brings about transformative possibilities while presenting significant privacy challenges. Legal practitioners must maintain an acute awareness of privacy frameworks, regulatory implications, and the unique risks associated with the technology to ensure compliance while protecting client trust. By addressing privacy concerns proactively—through thorough vetting of AI tools, ensuring robust vendor compliance, and fostering a culture of education and transparency—legal professionals can harness AI’s potential while upholding the integrity of the legal profession.

FAQs About Navigating AI Integration: Privacy Challenges in Legal Practice

- What privacy regulations should legal firms be aware of when integrating AI technologies?

Legal firms must navigate several privacy regulations, including the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA), which impose strict data protection requirements. Additionally, firms should be aware of state laws like the California Consumer Privacy Act (CCPA) and adhere to ethical principles, like attorney-client privilege, to protect sensitive client information.

- How can legal practitioners mitigate the risk of inadvertent data exposure during AI processing?

Legal practitioners can mitigate data exposure risks by implementing stringent security measures, such as data encryption, access controls, and rigorous vendor assessments. Moreover, it is essential for firms to evaluate and test AI tools thoroughly to identify potential vulnerabilities before deployment.

- What steps can legal firms take to address the risk of bias in AI algorithms?

To counter the risk of unintentional bias in AI, legal firms should conduct extensive evaluations of the datasets used for training AI systems. This includes identifying potentially biased data and ensuring that diverse and relevant datasets are utilized. Regular audits of AI outputs for fairness and alignment with ethical standards can further minimize bias.

- What role does client communication play in AI integration within legal practice?

Ongoing communication with clients is critical during AI integration. Legal firms should maintain transparency about how client data will be used and the privacy measures in place. This builds trust and reassures clients that their sensitive information is being handled securely, particularly in an era of increasing technology reliance.

- Why is vendor compliance important in the context of AI integration?

Vendor compliance is important because third-party AI service providers handle sensitive client data. Legal firms must ensure that these vendors uphold the same privacy and security standards to mitigate risks associated with data processing. Failure to ensure compliance can result in liability for the firm, as they remain responsible for protecting client information throughout the process.