Bias in AI: The Unseen Threat in Legal Tech?

As the legal industry adopts Artificial Intelligence (AI) in Legal Tech, bias in AI algorithms has emerged as a critical concern. AI can streamline legal processes and improve efficiency, but biases embedded in AI systems threaten the principles of fairness and justice on which the legal domain rests. This article examines bias in AI: its impact on legal decision-making, its root causes, and the need for ethical frameworks to mitigate this unseen threat in Legal Tech.

The Scope of Bias in Legal Tech

In Legal Tech, AI bias can appear in several forms, including:

- Algorithmic biases in predictive analytics

- Prejudicial outcomes in automated decision-making processes

- Discriminatory patterns in data-driven legal research

- Implicit biases in natural language processing and sentiment analysis

These forms of bias can perpetuate systemic injustice, widen existing disparities, and undermine the integrity of legal proceedings, causing real harm to the individuals and communities on the receiving end of biased outcomes.

The Impact of AI Bias on Legal Decision-Making

AI bias can significantly influence legal decision-making, leading to skewed judgments, unfair sentencing, and reduced access to justice. For example, a risk-assessment tool trained on historical arrest records can learn past policing patterns and carry them forward into bail or sentencing recommendations. In this way, biased algorithms can perpetuate discriminatory practices, reinforce societal inequalities, and deepen the marginalization of vulnerable populations, eroding trust in the legal system and obstructing equitable outcomes.

Identifying the Root Causes of AI Bias

AI bias rarely has a single cause. Common sources include:

- Data discrepancies and imbalanced data representation

- Algorithmic design flaws and lack of diversity in development teams

- Unintentional reinforcement of societal prejudices and stereotypes

- Inadequate testing and validation procedures for AI models

Addressing these root causes is essential to keep bias from being built into AI systems and to ensure that AI is deployed ethically in the legal domain.
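To make the first cause, imbalanced data representation, more concrete, here is one simple check a team might run before training: compare each group's share of a dataset with a reference share (for example, its share of the relevant population). This is a minimal sketch under stated assumptions: the record format, the `group` attribute, the `tolerance` of 0.5, and the function names are all hypothetical, and a real audit would need carefully justified reference figures and attributes.

```python
from collections import Counter


def group_shares(records, attribute):
    """Return each group's share of the dataset for one attribute.

    `records` is assumed to be a list of dicts (e.g. rows of case data);
    the attribute name is hypothetical and chosen by the auditor.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, reference, tolerance=0.5):
    """List groups whose share falls below `tolerance` times their reference share.

    `reference` maps each group to its expected share (an assumption the
    auditor must justify); groups absent from the data count as share 0.
    """
    return sorted(
        group
        for group, expected in reference.items()
        if shares.get(group, 0.0) < tolerance * expected
    )


if __name__ == "__main__":
    # Hypothetical toy data: 80 records from group "A", 20 from group "B".
    records = [{"group": "A"}] * 80 + [{"group": "B"}] * 20
    shares = group_shares(records, "group")
    print(shares)  # {'A': 0.8, 'B': 0.2}
    print(underrepresented(shares, {"A": 0.5, "B": 0.5}))  # ['B']
```

A check like this only detects imbalance against the chosen reference; it says nothing about whether the labels themselves encode past prejudice, which is the third cause listed above.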

Challenges in Mitigating Bias in Legal Tech

Mitigating bias in Legal Tech is difficult, and each of the following measures brings its own challenges:

- Developing comprehensive data collection and analysis protocols

- Promoting diversity and inclusion in AI development teams

- Implementing stringent testing and validation standards for AI algorithms

- Establishing transparent and explainable AI decision-making processes

Overcoming these challenges is necessary to safeguard fairness, equality, and justice in the legal system and to ensure that AI tools operate to ethical, unbiased standards.
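Stringent testing standards usually start with a measurable fairness criterion. One widely used screening heuristic is the "four-fifths" rule from US employment-discrimination guidance: if one group's rate of favorable outcomes is below 80% of the most-favored group's rate, the result warrants closer scrutiny. The sketch below computes that ratio for a batch of model outputs. It assumes outcomes arrive as hypothetical `(group, favorable)` pairs; the function names and the 0.8 default are illustrative, and a ratio above the threshold does not by itself show that a tool is unbiased.

```python
def selection_rates(outcomes):
    """Favorable-outcome rate per group.

    `outcomes` is assumed to be an iterable of (group, favorable) pairs,
    where `favorable` is True when the tool's output favored the person.
    """
    totals, favorable = {}, {}
    for group, is_favorable in outcomes:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(is_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity).

    `rates` must be non-empty.
    """
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


def passes_four_fifths(rates, threshold=0.8):
    """Screening check only: True if the ratio meets the (assumed) 0.8 threshold."""
    return disparate_impact_ratio(rates) >= threshold


if __name__ == "__main__":
    # Hypothetical batch: group A favored in 6 of 10 cases, group B in 3 of 10.
    outcomes = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
    rates = selection_rates(outcomes)
    print(rates)                      # {'A': 0.6, 'B': 0.3}
    print(passes_four_fifths(rates))  # False (ratio is 0.5)
```

Using a ratio rather than a raw difference keeps the check meaningful when overall favorable rates are low; other criteria (such as comparing error rates) may fit a given legal use case better.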

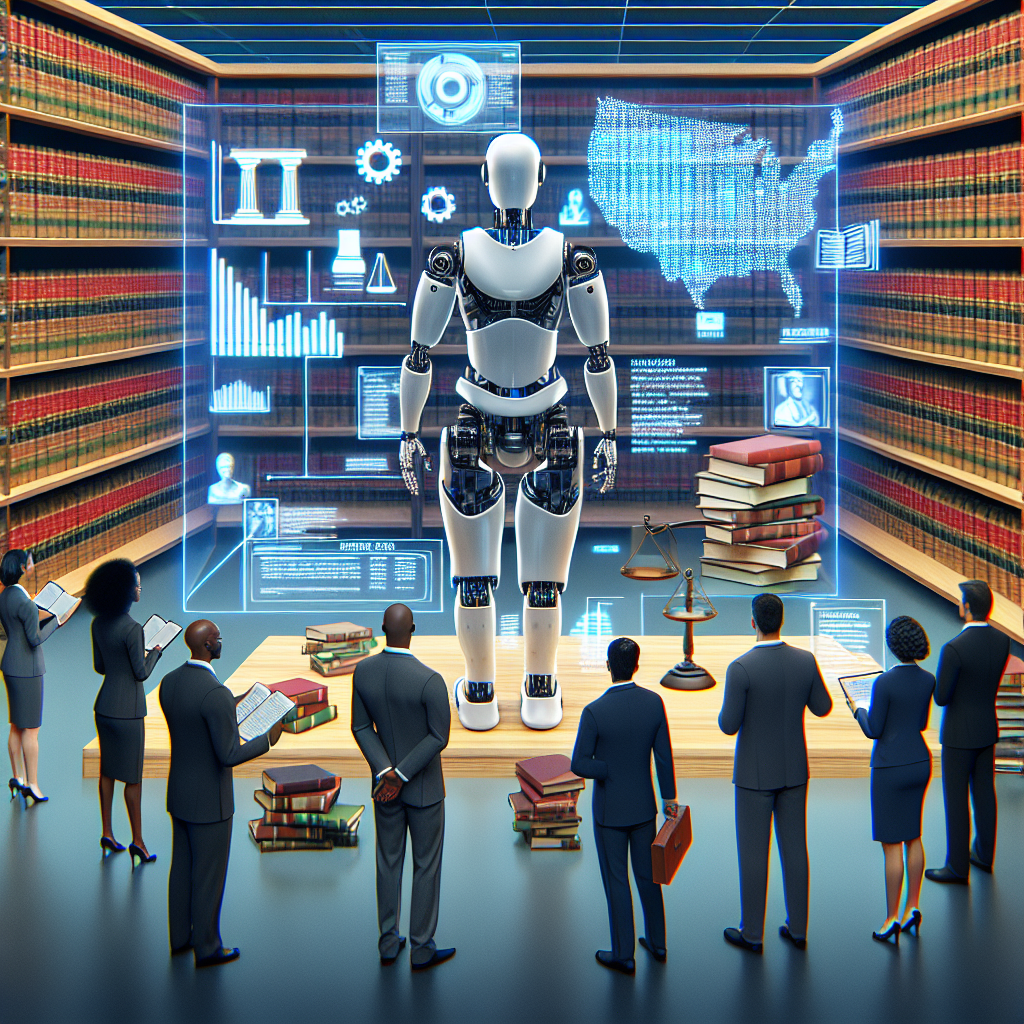

The Role of Ethical Frameworks in AI Governance

Ethical frameworks govern how AI is deployed in Legal Tech, guiding its development and implementation in line with ethical principles and legal standards. A sound framework should include:

- Guidelines for responsible data collection and utilization

- Protocols for addressing algorithmic biases and ensuring transparency

- Mechanisms for continuous monitoring and auditing of AI systems

- Training modules for legal professionals to recognize and mitigate AI bias

By adhering to such frameworks, legal practitioners and technology developers can together build a culture of ethical awareness and accountability as AI is integrated into legal work.
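Continuous monitoring and auditing can be as simple as recomputing an error metric per group on each new batch of logged decisions and raising an alert when the groups drift apart. The sketch below tracks the false positive rate (the share of people flagged as high risk among those for whom the predicted event did not occur) for a hypothetical risk-assessment tool. The `Prediction` record, the `audit` function, and the 0.1 gap threshold are assumptions for illustration only; which error metric to compare is itself a policy choice, since common fairness criteria can conflict with one another.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """One logged decision from a hypothetical risk-assessment tool."""
    group: str
    predicted_high_risk: bool
    outcome_occurred: bool  # True if the predicted event actually happened


def false_positive_rates(predictions):
    """Per-group share flagged high risk among cases where the event did not occur."""
    negatives, false_positives = {}, {}
    for p in predictions:
        if not p.outcome_occurred:
            negatives[p.group] = negatives.get(p.group, 0) + 1
            if p.predicted_high_risk:
                false_positives[p.group] = false_positives.get(p.group, 0) + 1
    return {g: false_positives.get(g, 0) / n for g, n in negatives.items()}


def audit(predictions, max_gap=0.1):
    """Return (rates, alert); alert is True when the largest FPR gap exceeds max_gap.

    The 0.1 default is an arbitrary illustrative threshold, not a standard.
    """
    rates = false_positive_rates(predictions)
    if len(rates) < 2:
        return rates, False  # fewer than two groups: nothing to compare
    gap = max(rates.values()) - min(rates.values())
    return rates, gap > max_gap


if __name__ == "__main__":
    # Hypothetical batch: 10 negatives per group; A has 2 false positives, B has 5.
    batch = [Prediction("A", i < 2, False) for i in range(10)]
    batch += [Prediction("B", i < 5, False) for i in range(10)]
    rates, alert = audit(batch)
    print(rates, alert)  # {'A': 0.2, 'B': 0.5} True
```

In practice an alert would trigger human review rather than an automatic fix, which is where the training modules for legal professionals come in.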

Conclusion

Bias in AI is a significant challenge that demands immediate, coordinated effort from legal professionals, technology experts, and regulators. By acknowledging the threat bias poses in Legal Tech, taking proactive steps to identify and address it, and adhering to comprehensive ethical frameworks, the legal industry can harness the full potential of AI while upholding fairness, equity, and justice in the legal system.

FAQs (Frequently Asked Questions)

1. What is AI bias in Legal Tech?

AI bias in Legal Tech refers to discriminatory patterns and prejudicial outcomes produced by AI algorithms, which skew legal decision-making and perpetuate systemic injustice.

2. How does AI bias impact legal decision-making?

AI bias can influence legal decision-making by reinforcing discriminatory practices, exacerbating disparities, and compromising access to justice, thereby undermining the integrity and fairness of legal proceedings.

3. What are the root causes of AI bias in Legal Tech?

The root causes include data discrepancies, algorithmic design flaws, societal prejudices, and inadequate testing procedures, all of which contribute to the perpetuation of biases within AI systems.

4. Why is it challenging to mitigate bias in Legal Tech?

Mitigating bias is challenging due to data complexities, lack of diversity in development teams, stringent testing requirements, and the need for transparent AI decision-making processes, all of which demand comprehensive strategies and interventions.

5. How can legal professionals contribute to the mitigation of AI bias?

Legal professionals can contribute by advocating for ethical AI governance, promoting diversity in AI development teams, and participating in training programs that raise awareness about AI bias and its implications within the legal domain.

6. What role do ethical frameworks play in AI governance?

Ethical frameworks guide the development and deployment of AI technologies, promoting ethical compliance, transparency, and accountability as AI is integrated into the legal domain.