Navigating Privacy Challenges in AI for Legal Practices

Introduction to Privacy Challenges in AI

Artificial Intelligence (AI) is increasingly being integrated into legal practices, providing tools for document review, legal research, and predictive analytics. While these technologies have the potential to enhance efficiency and accuracy, they also present unique privacy challenges that legal professionals must navigate. Understanding these challenges is critical for ensuring compliance with legal standards and maintaining client trust.

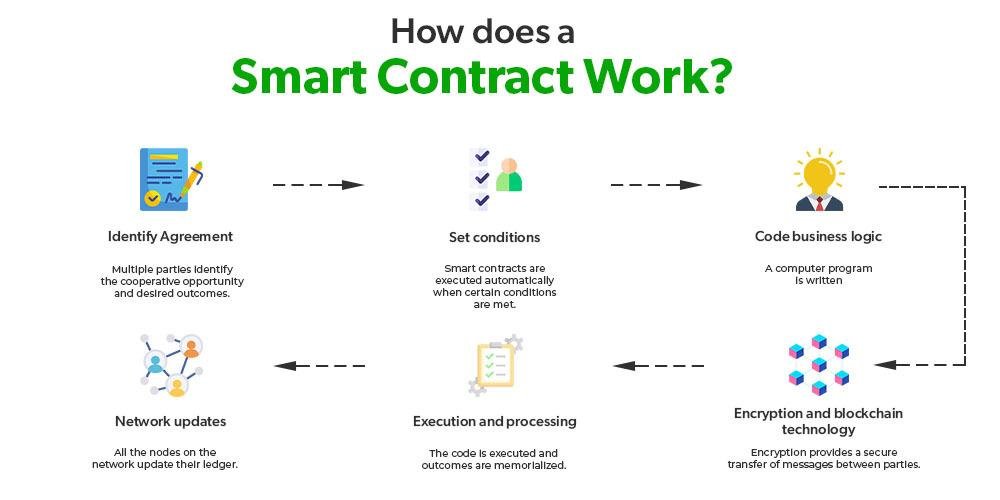

AI-driven technologies, such as Natural Language Processing (NLP), machine learning (ML) algorithms, and predictive analytics, have changed the landscape of legal practice. NLP enables computers to process human language, allowing legal professionals to quickly analyze large volumes of text. Similarly, ML algorithms learn from patterns in data, assisting in tasks like predicting case outcomes or identifying relevant precedents. However, reliance on such technologies raises important questions about client confidentiality and data integrity.

Moreover, legal standards and regulations shape how AI can be deployed in legal practices. Key laws such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) provide frameworks for managing personal data. Law firms that use AI need to understand these standards, as non-compliance can lead to heavy fines and loss of reputation. Comprehending the regulatory landscape is therefore a foundational step in addressing the privacy challenges posed by AI.

Key Privacy Challenges in AI Implementation

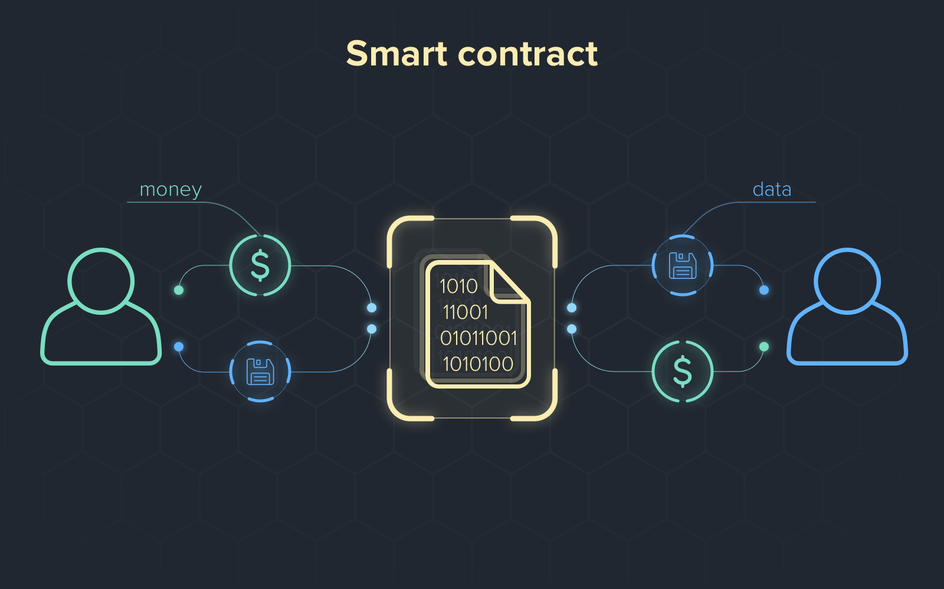

The deployment of AI in legal practices introduces various privacy-related challenges that organizations must address. The integration of machine learning and data-driven algorithms often requires vast amounts of personal data, increasing vulnerability to data breaches and unauthorized access. Legal practices that fail to implement robust security measures may find themselves facing significant liabilities, given the sensitive nature of the information handled, including client names, case details, and financial information.

Informed consent and transparency present further challenges for legal professionals. Obtaining informed consent for the utilization of client data in AI systems is crucial but often complex. Clients may not fully understand how their data is being used or the implications of its use in AI training. Therefore, legal practices must ensure clear communication about AI processes and the handling of client data to maintain trust and comply with legal standards.

Lastly, bias and fairness are critical considerations when implementing AI in legal practices. Because AI systems rely on historical data for training, there is a risk of perpetuating existing biases, which could lead to unfair outcomes in legal decision-making. Mechanisms for bias detection and correction, such as algorithm audits and diverse training data, must be established to mitigate these concerns and support equitable treatment for all clients.
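One simple form an algorithm audit could take is comparing how often a model produces a favorable prediction for different groups. The sketch below is illustrative only: the function names, the group labels "A" and "B", and the sample data are all hypothetical, and a real audit would use more than one metric.

```python
from collections import defaultdict

def favorable_rates(records):
    """Share of favorable predictions per group.

    records: iterable of (group_label, predicted_favorable) pairs.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, predicted_favorable in records:
        totals[group] += 1
        if predicted_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable prediction
    return min(rates.values()) / highest

# Hypothetical audit sample: (group, model predicted a favorable outcome)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = favorable_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0, as in this sample, would be a signal to investigate the training data and model further. Any cutoff for what counts as "too low" is a policy choice that the firm would need to set for itself.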


Compliance with Privacy Regulations

Legal practices that use AI must adhere to a range of privacy regulations governing data protection. Non-compliance can lead to serious legal repercussions and lasting damage to the practice’s reputation. Understanding key regulations, such as the GDPR and the CCPA, is essential in navigating the complexities of data privacy. For example, under the GDPR, practices must implement measures that honor data subjects’ rights, including the right to access their data and the right to have inaccuracies rectified.

Consequences of non-compliance can be severe, ranging from significant fines to reputational harm. For instance, for the most serious infringements the GDPR allows penalties of up to €20 million or 4% of a firm’s total worldwide annual turnover for the preceding financial year, whichever is higher. Such financial repercussions may cripple smaller firms or diminish the credibility of larger practices. Therefore, compliance with privacy regulations is not merely a legal obligation but a critical component of risk management.
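The "whichever is higher" rule means the €20 million figure acts as a floor on the maximum penalty, not a ceiling. A minimal sketch of the arithmetic follows; the function name and the two sample turnover figures are hypothetical, and the result is only the statutory upper bound, not a prediction of any actual fine.

```python
def gdpr_max_fine_eur(annual_turnover_eur):
    """Upper bound of the fine: the greater of EUR 20 million or 4% of turnover."""
    fixed_cap_eur = 20_000_000
    turnover_based_cap_eur = annual_turnover_eur * 4 / 100
    return max(fixed_cap_eur, turnover_based_cap_eur)

# Hypothetical firms: for the smaller one 4% of turnover is EUR 200,000,
# so the fixed EUR 20 million cap is the higher of the two figures.
small_firm_cap = gdpr_max_fine_eur(5_000_000)
# For the larger one 4% of turnover is EUR 40 million, which exceeds the fixed cap.
large_firm_cap = gdpr_max_fine_eur(1_000_000_000)
print(small_firm_cap, large_firm_cap)
```

The two caps are equal at a turnover of €500 million; above that, the percentage-based figure is the one that applies.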

Best practices for compliance should be rooted in a framework of ongoing evaluation and proactive measures. Regular audits of how AI systems use data help legal practices stay within the boundaries set by applicable laws. Additionally, integrating the principle of privacy by design into AI development processes helps build a culture of compliance. Training staff on data protection and compliance requirements further reinforces the importance of privacy in every aspect of legal operations.

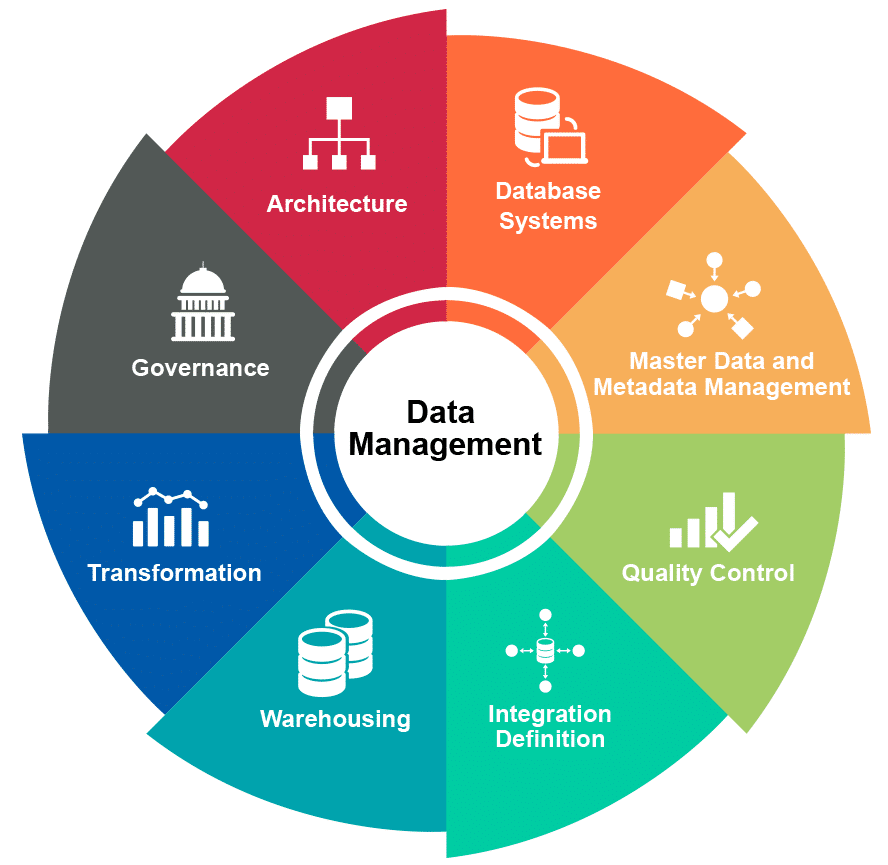

Implementing Best Practices for Data Management

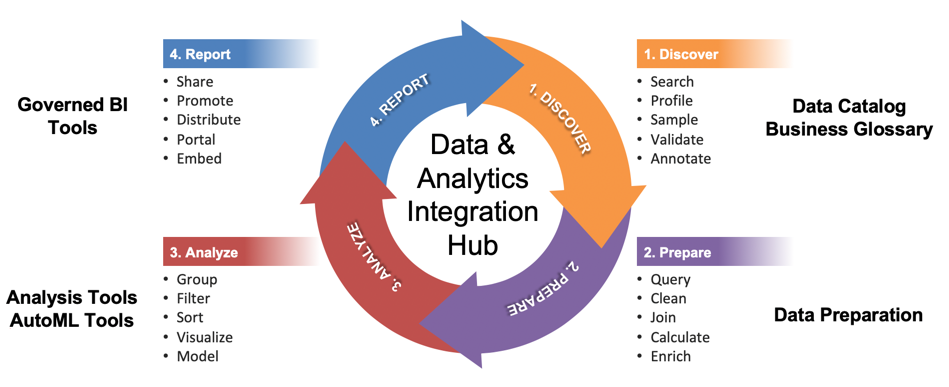

Effective data management strategies are vital for addressing privacy challenges in AI. Law firms must establish processes that ensure the ethical and lawful use of client information. One key strategy is data minimization, which involves collecting and retaining only the data necessary for the intended legal purposes. Regular audits and reviews should be conducted to identify and delete non-essential data, thus reducing the amount of information exposed if unauthorized access occurs.
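In code, data minimization often comes down to two checks: dropping fields an AI tool does not need, and flagging records that have outlived their retention period. The sketch below assumes a hypothetical allow-list of fields and a hypothetical seven-year retention period; neither comes from any regulation, and a firm would substitute its own policy values.

```python
from datetime import date, timedelta

# Hypothetical policy values: fields the AI tool is allowed to see,
# and how long a closed matter may be retained.
ALLOWED_FIELDS = {"matter_id", "document_text", "jurisdiction"}
RETENTION_PERIOD = timedelta(days=365 * 7)

def minimize(record):
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}

def is_past_retention(closed_on, today):
    """True if the matter closed longer ago than the retention period."""
    return today - closed_on > RETENTION_PERIOD

# Hypothetical client record
record = {
    "matter_id": "M-1001",
    "client_name": "Jane Doe",
    "bank_account": "XX-0000",
    "document_text": "Lease agreement draft",
    "jurisdiction": "CA",
}
print(minimize(record))
print(is_past_retention(date(2015, 1, 1), today=date(2024, 1, 1)))
```

An allow-list is used here rather than a block-list so that any new field added to a record is excluded by default until someone decides it is needed.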

Additionally, developing clear data usage policies is essential for guiding legal professionals on best practices regarding client data. These policies should define how data is collected, stored, and used, along with clear guidelines for accessing sensitive information. Training staff on these policies not only ensures compliance but also fosters a culture of accountability surrounding client privacy.

Lastly, embracing technology solutions, such as advanced encryption tools and secure storage options, can significantly mitigate data security risks. Employing these technologies guards against unauthorized access and potential breaches, further enhancing a law firm’s ability to protect client information. By prioritizing data management and security, legal practices can establish themselves as trustworthy and conscientious stewards of sensitive client data.
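Encryption itself is best left to a vetted library or storage product and is not reproduced here. A related, complementary measure is pseudonymization: replacing direct identifiers with keyed tokens before data reaches an AI tool. Note that this is a different technique from encryption. The sketch below uses Python's standard `hmac` and `hashlib` modules; the function name and the placeholder keys are hypothetical, and a real key would come from a secrets manager, not source code.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed HMAC-SHA256 token (hex string)."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

# Placeholder keys for illustration only
key = b"replace-with-a-key-from-a-secrets-manager"
other_key = b"a-different-key"

token_a = pseudonymize("Jane Doe", key)
token_b = pseudonymize("Jane Doe", key)        # same input and key: same token
token_c = pseudonymize("Jane Doe", other_key)  # different key: different token
print(token_a == token_b, token_a == token_c)
```

Because the same input and key always yield the same token, records for one client can still be linked across documents without exposing the name. Anyone holding the key can recompute tokens for known names, so the key needs the same protection as the original data.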

Conclusion

Navigating the privacy challenges associated with AI in legal practices requires diligence, understanding, and proactive measures. By addressing data security, informed consent, bias, and regulatory compliance, legal practitioners can harness the benefits of AI while upholding client trust and ethical standards. Implementing best practices for data management can result in a more secure and efficient practice that is better prepared for the evolving landscape of technology and privacy.

FAQs

1. What are the main privacy concerns associated with AI in legal practices?

The primary concerns include data security, informed consent, and biased outcomes. Legal practices must ensure sensitive data is securely stored and processed, obtain clear consent for data usage, and mitigate potential biases in AI decision-making.

2. How do privacy laws like GDPR and CCPA affect AI in legal practices?

These regulations impose strict requirements on data handling. Depending on the regulation, these can include establishing a valid basis for processing (such as informed consent), limiting the data collected to what is necessary, and enabling individuals to access and control their personal data. Non-compliance can lead to severe penalties.

3. What steps can legal practitioners take to comply with privacy regulations?

Firms can conduct regular audits of their AI systems, implement privacy by design principles during AI development, and train staff on compliance requirements related to personal data handling.

4. How can law firms manage data effectively when using AI technologies?

Firms should adopt strategies like data minimization, develop clear data use policies, and leverage secure storage and encryption technologies to ensure responsible data management.

5. What role does transparency play in AI usage within legal practices?

Transparency is essential for maintaining client trust and ensuring compliance with legal standards. Legal practices must communicate clearly how AI systems use client data and implement frameworks for obtaining informed consent.