Navigating Regulatory Compliance in AI-Driven Legal Tech

Introduction:

The legal industry is undergoing a profound transformation due to the rise of Artificial Intelligence (AI) technologies, leading to a paradigm shift in how legal professionals operate. AI-driven legal tech offers a suite of tools designed to streamline operational workflows, increase efficiency, and empower decision-making processes within law firms. These advancements range from intelligent document review systems to predictive legal analytics, all of which leverage Natural Language Processing (NLP), Machine Learning (ML), and advanced data analytics. However, the burgeoning presence of AI in the legal domain necessitates a thorough understanding of regulatory compliance imperatives to ensure ethical practices and maintain client trust in a technology-dependent landscape. This article explores the regulatory framework surrounding AI in legal tech, the challenges faced in compliance, and best practices for navigating this complex environment.

Introduction to AI in Legal Tech

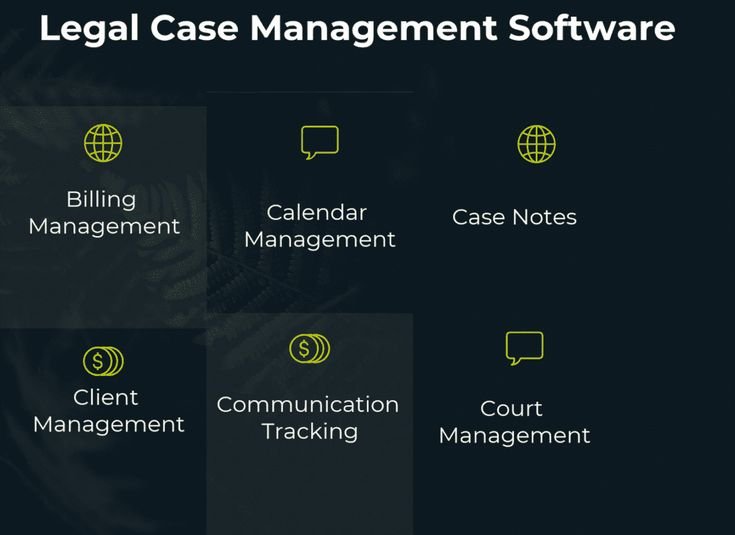

The integration of AI into legal tech has revolutionized the manner in which legal services are delivered. By automating repetitive tasks such as legal research, contract analysis, and due diligence, AI tools enable law firms to allocate their human resources to more strategic initiatives, thus enhancing overall productivity. Furthermore, AI has the capacity to analyze vast datasets at speeds unachievable by human attorneys, offering valuable insights that can drive informed decision-making. As legal tech continues to evolve, so too do the regulatory considerations that accompany these technological innovations. The importance of adhering to legal and ethical standards cannot be overstated, as non-compliance can lead to reputational damage, legal repercussions, and erosion of client trust.

The dynamic nature of both legal technology and the regulatory landscape presents unique challenges for entities involved in AI-driven legal services. For instance, firms dealing with personal data must ensure compliance with various privacy regulations. Moreover, as AI algorithms learn from historical data, they risk perpetuating existing biases or introducing new ones. Balancing innovation with regulatory adherence is crucial for law firms seeking to harness AI effectively while safeguarding their clients’ interests. Understanding the regulatory framework governing AI technologies in legal contexts is the first step toward ensuring compliance and maintaining the integrity of legal services.

In light of these challenges, the legal profession must prioritize regulatory compliance not only to avoid potential penalties but also to build a foundation of trust with clients. Fostering an environment of transparency, accountability, and ethical responsibility ensures that clients feel confident in the capabilities and decisions made by AI-driven legal solutions. Therefore, a thorough exploration of regulatory frameworks and compliance challenges is essential for legal tech stakeholders aiming to successfully navigate the intricacies of this transformative landscape.

Understanding Regulatory Frameworks

Navigating the regulatory landscape requires an awareness of the various frameworks that govern AI technologies. One of the most influential is the General Data Protection Regulation (GDPR), which applies to organizations established in the European Union and to organizations elsewhere that offer goods or services to, or monitor the behavior of, people in the EU. The GDPR requires a lawful basis for any processing of personal data (consent is one such basis, alongside others such as contractual necessity, legal obligation, and legitimate interests) and mandates appropriate technical and organizational security measures. Legal tech firms must integrate GDPR principles into their AI systems, particularly when handling sensitive information that could compromise client privacy. Failure to comply can result in fines of up to EUR 20 million or 4% of worldwide annual turnover, whichever is higher, as well as loss of reputation, underscoring the necessity of understanding these regulatory requirements.

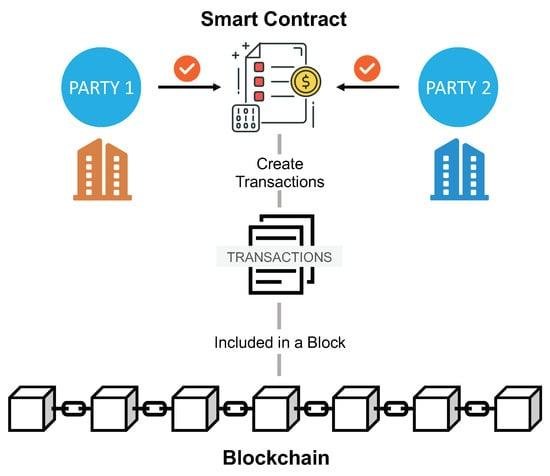

In the United States, the compliance landscape is equally complex, shaped by sector-specific laws such as the Health Insurance Portability and Accountability Act (HIPAA) and the Fair Credit Reporting Act (FCRA). HIPAA sets stringent standards for the protection of health information, which affects legal professionals working on healthcare-related matters: a law firm that handles protected health information on behalf of a covered entity can itself be subject to HIPAA obligations as a business associate. Legal tech developers must evaluate how their AI applications handle health data to ensure compliance with HIPAA. Likewise, the FCRA governs how consumer report information is collected, shared, and used, which matters for AI applications that support credit risk assessment or background screening. Violations of these laws can lead to severe penalties and legal challenges, making it imperative for legal tech firms to remain vigilant and informed.

Moreover, organizations must stay abreast of emerging regulations that aim to address the unique challenges posed by AI technologies. This includes frameworks for ethical AI development, accountability measures, and guidelines for data use. By proactively engaging with these regulations and adapting their practices accordingly, legal tech companies can navigate compliance challenges more effectively, ultimately enhancing their credibility and establishing themselves as responsible players within the legal technology arena.

Challenges of Compliance in AI-Driven Solutions

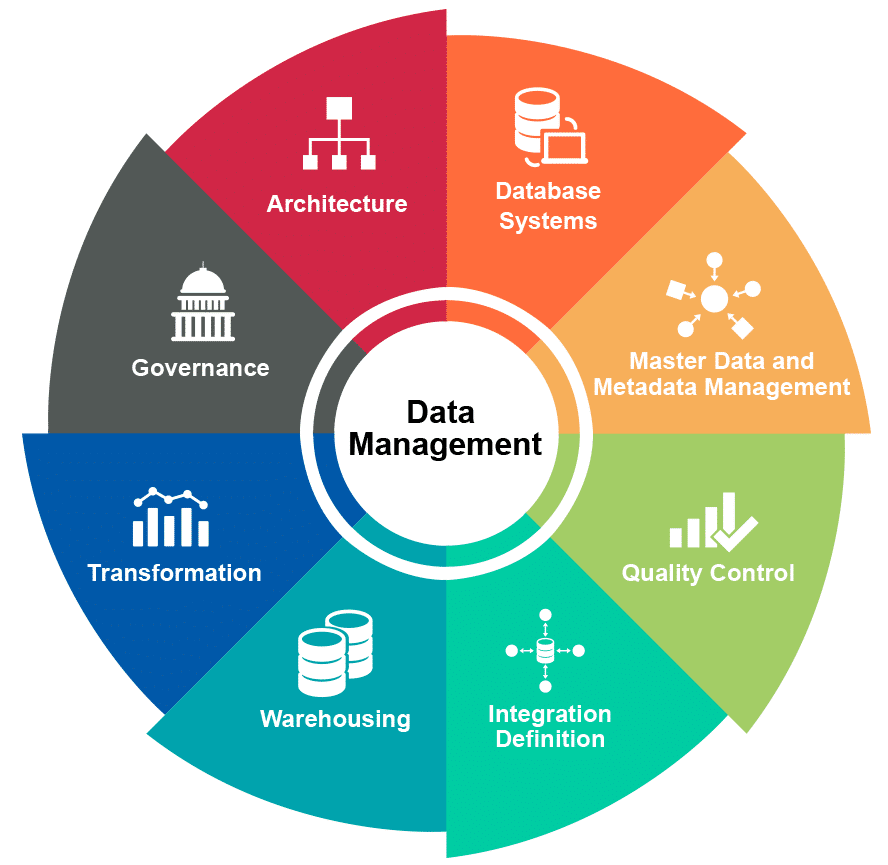

Legal tech firms face a multitude of compliance challenges as they strive to balance innovation with regulatory obligations. One of the foremost challenges is ensuring data privacy and security, an increasingly critical concern as the volume of sensitive information handled by legal tech solutions continues to grow. Compliance with regulations like GDPR and HIPAA requires robust data governance frameworks, data encryption, and incident response protocols to protect client data against breaches. However, when AI systems are retrained or updated on new inputs, the data they hold and the way they use it can change over time, so maintaining compliance requires continuous monitoring and adaptation of data practices.
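One data-protection measure that often sits alongside encryption is pseudonymization: replacing direct identifiers with keyed hashes before records reach an AI pipeline. The sketch below is a minimal illustration, not a complete control. The field names, the sample record, and the hard-coded key are hypothetical; in practice the key would come from a managed secret store, and pseudonymized data can still count as personal data under the GDPR.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest (HMAC) of the value as a hex string."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    """Copy the record, replacing only the listed sensitive fields."""
    return {
        name: pseudonymize(str(value), key) if name in sensitive_fields else value
        for name, value in record.items()
    }


# Hypothetical example data; the key is hard-coded for illustration only.
demo_key = b"replace-with-a-managed-secret"
client_record = {"client_name": "Jane Doe", "matter_id": "M-100", "notes": "Lease review"}
safe_record = pseudonymize_record(client_record, {"client_name"}, demo_key)
print(safe_record)
```

Because the same key always maps a given name to the same digest, records for one client can still be linked inside the pipeline without exposing the name itself. Rotating or destroying the key breaks that link.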

Another significant challenge lies in addressing algorithmic bias, which can occur when AI systems are trained on biased datasets or built on poorly designed algorithms. In the legal sector, biased AI could lead to inequitable outcomes, undermining the principles of justice and fairness. Legal tech companies must take proactive steps to identify and mitigate bias in their AI applications, ensuring that their solutions promote fairness and transparency. This requires a commitment to ethical AI practices, which might include employing diverse data sets, conducting audits to identify potential biases, and implementing fairness-checking algorithms.
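As one illustration of what a fairness check might look like, the sketch below computes the rate of favorable outcomes per group and the ratio between the lowest and highest rates, sometimes called the disparate impact ratio. The 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in U.S. employment selection guidance; applying it to legal tech outputs is an assumption made here for illustration, not an established legal standard. The group labels and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="favorable"):
    """Return the share of favorable outcomes for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for record in records:
        totals[record[group_key]] += 1
        if record[outcome_key]:
            favorable[record[group_key]] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical model outputs: group A is favored 4 times in 5, group B 2 in 5.
sample = (
    [{"group": "A", "favorable": True}] * 4
    + [{"group": "A", "favorable": False}]
    + [{"group": "B", "favorable": True}] * 2
    + [{"group": "B", "favorable": False}] * 3
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, see the note above
print(rates, ratio, needs_review)
```

A single ratio like this is only a screening signal. A flagged result calls for a closer look at the training data and the decision context rather than an automatic conclusion that the system is biased.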

Finally, accountability and liability pose considerable challenges in an AI-driven legal environment. As AI systems increasingly influence decision-making processes, it becomes harder to determine who is responsible for an error or adverse outcome: the developer of the tool, the firm that deployed it, or the attorney who relied on its output. Establishing clear accountability frameworks and delineating liability standards are critical steps in building trust between legal tech firms and their clients. By proactively addressing these challenges, legal tech companies can demonstrate their commitment to ethical and compliant practices while fostering confidence in their AI-driven solutions.

Best Practices for Compliance

To effectively mitigate compliance risks, legal tech firms should adopt a proactive stance on regulatory adherence by implementing a series of best practices. One essential strategy is conducting regular audits of data management practices to assess compliance with regulatory requirements. These audits should encompass data collection methods, security protocols, and data processing workflows to ensure alignment with existing regulations such as GDPR, HIPAA, and FCRA. By regularly reviewing practices, firms can identify gaps and areas for improvement while fostering a culture of accountability and ethical responsibility.
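Parts of such an audit can be automated. The sketch below checks a data inventory for three common gaps: no documented legal basis, no encryption at rest, and records held past a retention period. The `DataRecord` fields and the seven-year retention default are assumptions chosen for illustration; actual retention periods depend on jurisdiction, record type, and firm policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class DataRecord:
    """One entry in a hypothetical data inventory."""
    record_id: str
    collected_on: date
    legal_basis: Optional[str]
    encrypted_at_rest: bool


def audit_records(records, today, retention_days=365 * 7):
    """Return (record_id, finding) pairs for each gap detected."""
    cutoff = today - timedelta(days=retention_days)
    findings = []
    for record in records:
        if not record.legal_basis:
            findings.append((record.record_id, "no documented legal basis"))
        if not record.encrypted_at_rest:
            findings.append((record.record_id, "not encrypted at rest"))
        if record.collected_on < cutoff:
            findings.append((record.record_id, "past retention period"))
    return findings


# Hypothetical inventory: r1 is compliant, r2 fails all three checks.
inventory = [
    DataRecord("r1", date(2024, 1, 1), "contract", True),
    DataRecord("r2", date(2010, 1, 1), None, False),
]
findings = audit_records(inventory, today=date(2025, 1, 1))
for record_id, finding in findings:
    print(record_id, finding)
```

A script like this supplements a manual audit rather than replacing it, since it can only test what the inventory records and cannot judge whether a stated legal basis is actually valid.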

Another best practice involves investing in training and awareness programs for all team members about the importance of regulatory compliance. Legal professionals and technology developers alike must understand the implications of regulatory frameworks on their work. Training sessions might cover topics such as ethical AI development, data security best practices, and awareness of potential biases in AI algorithms. By fostering a culture of learning and vigilance, firms can equip their employees to navigate the complexities of regulatory compliance more effectively.

Lastly, collaborating with regulatory bodies and stakeholders can provide valuable insights into best practices for compliance in the legal tech space. Partnerships with legal associations, industry groups, and governmental organizations facilitate knowledge sharing and make it possible to tackle compliance challenges collectively. Such partnerships enable legal tech firms to remain informed about emerging regulations, seek guidance on best practices, and contribute to the development of industry standards that elevate compliance efforts across the sector.

Conclusion:

As AI technologies continue to reshape the legal landscape, navigating regulatory compliance emerges as a paramount concern for legal tech firms. By thoroughly understanding the regulatory frameworks that govern AI applications and actively addressing the challenges associated with compliance, organizations can foster trust, ensure ethical practices, and mitigate potential legal repercussions. Embracing best practices, such as conducting regular audits, training employees, and collaborating with stakeholders, will position legal tech companies for success in an increasingly complex environment. In doing so, they can harness the transformative power of AI while delivering secure, trustworthy, and ethically sound legal services to their clients.

FAQs

1. What are some key regulations that govern AI in legal tech?

Key regulations include the General Data Protection Regulation (GDPR), which protects the personal data of people in the EU; the Health Insurance Portability and Accountability Act (HIPAA), which protects health information in the U.S.; and the Fair Credit Reporting Act (FCRA), which governs the collection and use of consumer report information in the U.S.

2. How can legal tech firms ensure compliance with data privacy laws?

Legal tech firms can ensure compliance by implementing robust data governance frameworks, conducting regular audits, training employees on data protection principles, and utilizing encryption and secure data handling protocols to protect sensitive information.

3. What risks are associated with algorithmic bias in AI-driven legal solutions?

Algorithmic bias poses risks such as inequitable treatment of clients, potential legal ramifications, and damage to a firm’s reputation. It is essential for firms to identify, mitigate, and monitor biases in AI systems to ensure fairness and transparency in legal outcomes.

4. What best practices should legal tech firms adopt for regulatory compliance?

Best practices include conducting regular compliance audits, providing training programs for staff, and fostering collaborations with regulatory bodies and industry stakeholders to stay informed about regulations and share insights about compliance.

5. How can legal tech firms build trust with their clients?

Legal tech firms can build client trust by prioritizing regulatory compliance, ensuring data security, implementing transparent AI practices, and fostering a culture of accountability and ethical responsibility throughout their organization.