The Regulatory Future of AI in the Legal Sector

Introduction

Regulation of AI in the legal sector will shape how legal practice changes, addressing both the opportunities and the challenges that advanced technologies present. As artificial intelligence continues to evolve, its integration into legal services raises critical questions about ethics, accountability, and compliance. Regulatory frameworks are being developed to ensure that AI applications in law uphold standards of fairness, transparency, and data protection. This evolving regulatory environment aims to balance innovation with consumer protection and professional integrity, shaping how legal professionals use AI tools to enhance efficiency, improve access to justice, and maintain the rule of law. How stakeholders navigate this terrain will define the role of technology in legal practice and its impact on society.

Emerging Regulations Impacting AI in Legal Practice

As artificial intelligence continues to permeate various sectors, the legal industry is no exception. The integration of AI technologies into legal practice has prompted a growing recognition of the need for regulatory frameworks that address the unique challenges and ethical considerations associated with these advancements. Emerging regulations are beginning to shape the landscape of AI in legal practice, reflecting a concerted effort to balance innovation with accountability.

One of the primary concerns driving the development of regulations is the potential for bias in AI algorithms. Legal professionals rely on data-driven insights to inform their decisions, yet if the underlying data is flawed or biased, the outcomes can perpetuate existing inequalities. Consequently, regulators are focusing on establishing standards for transparency and fairness in AI systems. This includes mandates for law firms to disclose the data sources used in training AI models, as well as requirements for regular audits to assess the performance and fairness of these systems. By implementing such measures, regulators aim to foster trust in AI technologies while ensuring that they do not inadvertently reinforce systemic biases.
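To make the idea of a fairness audit more concrete, the following is a minimal sketch of one check such an audit might include: comparing how often an AI tool produces a favorable outcome for different groups. The group labels, the sample data, and the 0.8 review threshold are all assumptions made for illustration. The threshold borrows the "four-fifths" rule of thumb from US employment selection guidance; it is not a standard that any regulator is known to have set for legal AI tools.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, is_favorable) pairs, where
    `is_favorable` is True when the tool produced the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in records:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical audit sample: group label and whether the outcome was favorable.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

# Assumed threshold, borrowed from the four-fifths rule of thumb.
REVIEW_THRESHOLD = 0.8

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 3), needs_review)
```

A single ratio like this is only a screening signal. A low value would prompt closer review of the training data and the tool's outputs rather than establish bias on its own.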

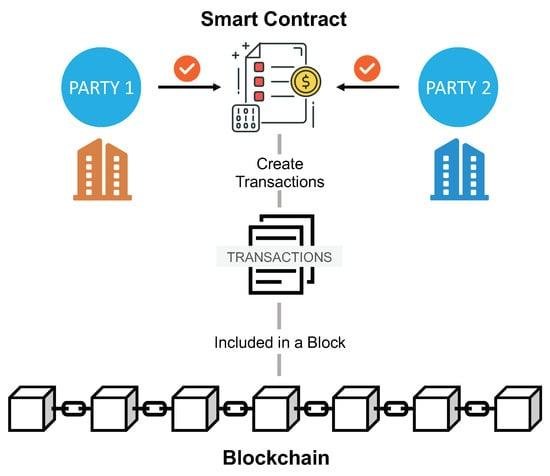

In addition to addressing bias, emerging regulations are also concerned with data privacy and security. The legal sector handles sensitive information, and the use of AI tools raises significant questions about how this data is managed and protected. As jurisdictions around the world adopt stricter data protection laws, such as the General Data Protection Regulation (GDPR) in the European Union, legal practitioners must navigate a complex web of compliance requirements. This has led to calls for the development of specific guidelines that govern the use of AI in legal contexts, ensuring that client confidentiality is maintained and that data is processed in a manner that aligns with legal standards.

Moreover, the issue of accountability in AI decision-making is gaining traction among regulators. As AI systems become more autonomous, determining liability in cases of error or malpractice becomes increasingly complex. Legal professionals are advocating for clear frameworks that delineate the responsibilities of AI developers, law firms, and individual practitioners. This includes discussions around the need for “explainable AI,” which refers to the ability of AI systems to provide understandable justifications for their decisions. By promoting transparency in AI operations, regulators can help ensure that legal practitioners can effectively interpret and challenge AI-generated outcomes.

Furthermore, the rapid pace of technological advancement necessitates a dynamic regulatory approach. As AI tools evolve, so too must the regulations that govern their use. This has led to the establishment of collaborative initiatives between legal professionals, technologists, and regulators aimed at creating adaptive regulatory frameworks. These partnerships are essential for fostering an environment where innovation can thrive while still adhering to ethical and legal standards. By engaging in ongoing dialogue, stakeholders can identify emerging risks and develop proactive strategies to mitigate them.

In conclusion, the regulatory future of AI in the legal sector is being shaped by a confluence of factors, including concerns about bias, data privacy, accountability, and the need for adaptive frameworks. As these regulations continue to emerge, legal practitioners must remain vigilant and proactive in their approach to integrating AI technologies into their practices. By embracing these regulatory developments, the legal sector can harness the potential of AI while ensuring that it operates within a framework that prioritizes fairness, transparency, and ethical responsibility. Ultimately, the successful navigation of this regulatory landscape will determine the extent to which AI can enhance legal practice without compromising the foundational principles of justice and equity.

Ethical Considerations for AI Use in Law

As artificial intelligence continues to permeate various sectors, its integration into the legal field raises significant ethical considerations that must be addressed to ensure responsible use. The legal profession, traditionally grounded in principles of justice, fairness, and accountability, faces unique challenges as it navigates the complexities introduced by AI technologies. One of the foremost ethical concerns is the potential for bias in AI algorithms. These systems often rely on historical data to make predictions or recommendations, which can inadvertently perpetuate existing biases present in that data. For instance, if an AI tool is trained on past legal cases that reflect systemic biases, it may produce outcomes that disproportionately affect certain demographic groups. Therefore, it is imperative for legal practitioners to critically assess the data sets used in AI training and to implement measures that mitigate bias, ensuring that AI applications promote equity rather than exacerbate disparities.

Moreover, the transparency of AI systems poses another ethical dilemma. Legal professionals must grapple with the “black box” nature of many AI algorithms, which can obscure the rationale behind their outputs. This lack of transparency can hinder a lawyer’s ability to explain decisions to clients or to challenge AI-generated recommendations in court. Consequently, fostering a culture of transparency is essential, where AI systems are designed to provide clear explanations for their conclusions. This not only enhances trust in AI tools but also aligns with the legal profession’s commitment to due process and informed decision-making.

In addition to bias and transparency, the issue of accountability emerges as a critical ethical consideration. When AI systems are involved in legal decision-making, it becomes challenging to determine who is responsible for errors or adverse outcomes. If an AI tool misinterprets a legal precedent or provides flawed advice, the question arises: is the responsibility borne by the software developers, the legal practitioners who relied on the tool, or the firm that implemented it? Establishing clear lines of accountability is vital to ensure that ethical standards are upheld and that clients receive competent representation. Legal professionals must advocate for frameworks that delineate responsibility in the context of AI use, thereby safeguarding the integrity of the legal process.

Furthermore, the implications of AI on client confidentiality and data security cannot be overlooked. The legal sector is bound by strict confidentiality obligations, and the integration of AI tools raises concerns about how sensitive client information is handled. Legal practitioners must ensure that AI systems comply with data protection regulations and that robust security measures are in place to prevent unauthorized access or data breaches. This responsibility extends to understanding the mechanisms by which AI tools process and store data, as well as ensuring that clients are informed about how their information is utilized.

As the legal sector continues to embrace AI technologies, ongoing education and training for legal professionals become paramount. Lawyers must be equipped not only with the technical skills to use AI tools effectively but also with a strong ethical framework to guide their application. This dual focus on technical proficiency and ethical awareness will empower legal practitioners to harness the benefits of AI while upholding the core values of the profession.

In conclusion, the ethical considerations surrounding AI use in the legal sector are multifaceted and require careful deliberation. By addressing issues of bias, transparency, accountability, and data security, legal professionals can navigate the complexities of AI integration responsibly. As the regulatory landscape evolves, it is essential for the legal community to engage in proactive discussions about these ethical challenges, ensuring that the future of AI in law aligns with the principles of justice and integrity that underpin the profession.

The Role of Government in AI Oversight for Legal Services

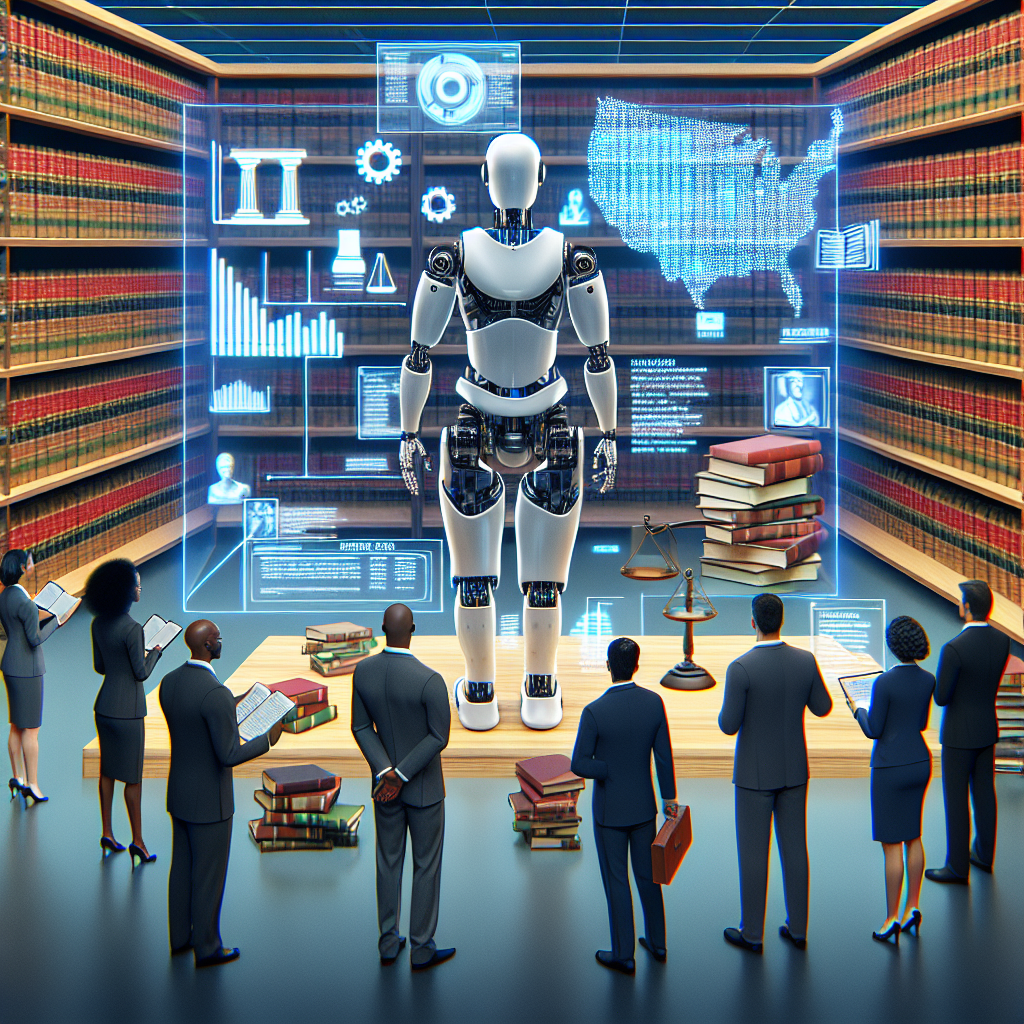

As artificial intelligence continues to permeate various sectors, its integration into the legal field raises significant questions regarding oversight and regulation. The role of government in AI oversight for legal services is becoming increasingly critical, as the potential for both innovation and ethical dilemmas grows. Governments worldwide are beginning to recognize the necessity of establishing frameworks that not only foster technological advancement but also ensure the protection of fundamental rights and the integrity of the legal system.

To begin with, the government’s involvement in AI regulation is essential for maintaining public trust in legal services. As AI systems are deployed for tasks such as legal research, contract analysis, and even predictive analytics for case outcomes, the potential for bias and error becomes a pressing concern. For instance, if an AI tool is trained on historical legal data that reflects systemic biases, it may inadvertently perpetuate these biases in its recommendations. Therefore, government oversight is crucial in setting standards for transparency and accountability in AI algorithms. By mandating that AI systems used in legal contexts undergo rigorous testing and validation, governments can help ensure that these technologies operate fairly and justly.

Moreover, the government has a pivotal role in establishing ethical guidelines for the use of AI in legal services. As AI tools become more sophisticated, the line between human judgment and machine decision-making blurs. This raises ethical questions about the extent to which AI should be allowed to influence legal outcomes. For example, should an AI system be permitted to make recommendations on sentencing or bail decisions? To address such dilemmas, governments must engage with legal professionals, ethicists, and technologists to develop comprehensive guidelines that delineate acceptable uses of AI in the legal domain. This collaborative approach not only enhances the legitimacy of the regulations but also ensures that diverse perspectives are considered.

In addition to ethical considerations, the government must also focus on the implications of data privacy and security in the context of AI in legal services. Legal professionals handle sensitive information, and the integration of AI systems raises concerns about data breaches and unauthorized access. Consequently, governments need to implement robust data protection regulations that safeguard client confidentiality while allowing for the responsible use of AI technologies. This may involve establishing clear protocols for data handling, storage, and sharing, as well as ensuring that AI systems comply with existing privacy laws.

Furthermore, as AI technologies evolve, so too must the regulatory frameworks that govern them. Governments should adopt a proactive stance, continuously monitoring advancements in AI and adapting regulations accordingly. This dynamic approach will enable regulators to address emerging challenges and opportunities in real time, rather than relying on outdated policies that may stifle innovation. By fostering an environment that encourages experimentation and adaptation, governments can help the legal sector harness the full potential of AI while mitigating associated risks.

In conclusion, the role of government in AI oversight for legal services is multifaceted and essential for ensuring that the integration of these technologies is both ethical and effective. By establishing standards for transparency, developing ethical guidelines, safeguarding data privacy, and adopting a proactive regulatory approach, governments can help shape a future where AI enhances the legal profession without compromising its core values. As the legal landscape continues to evolve, the collaboration between government, legal practitioners, and technologists will be vital in navigating the complexities of AI in the legal sector.

Future Trends in AI Compliance and Legal Standards

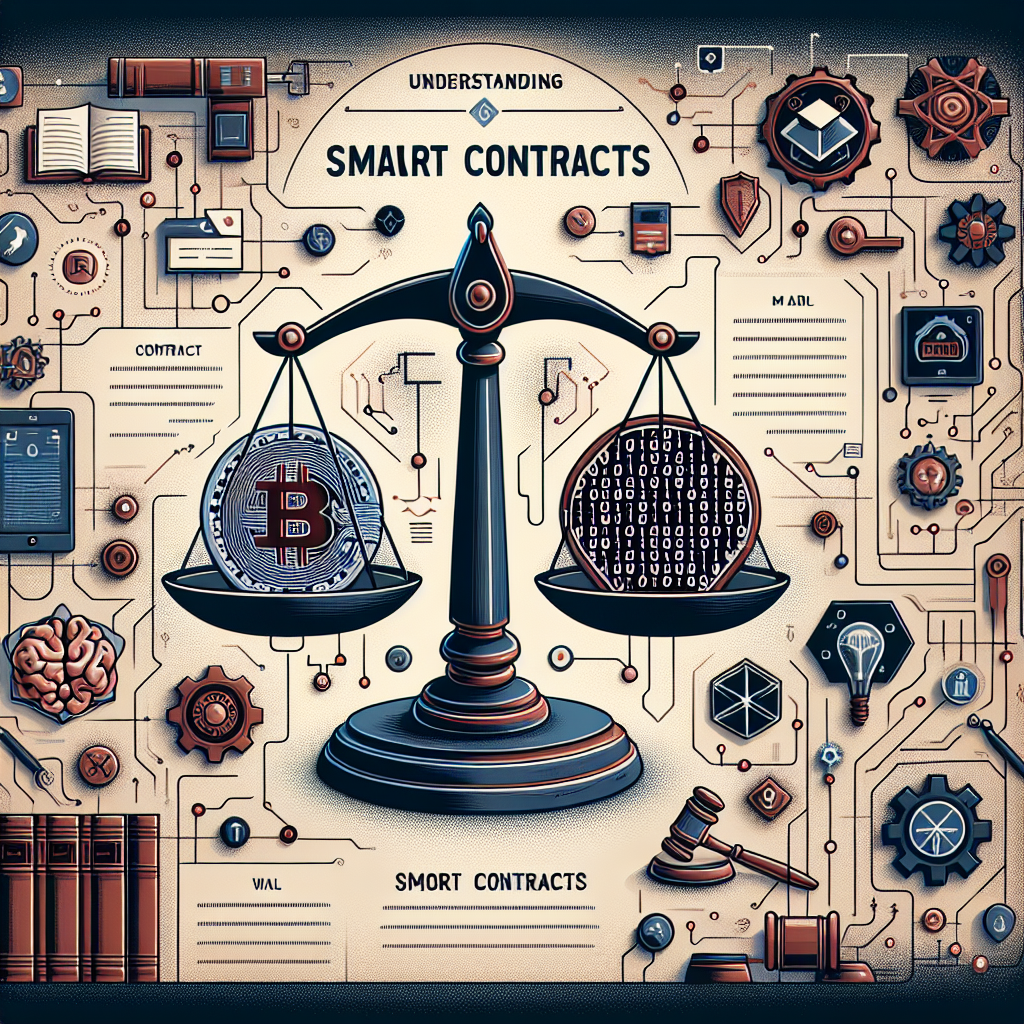

As artificial intelligence continues to permeate various sectors, the legal industry stands at a pivotal juncture, grappling with the implications of AI technologies on compliance and legal standards. The future of AI in the legal sector is not merely about the adoption of advanced tools; it is also about navigating the complex landscape of regulatory frameworks that will inevitably evolve to address the unique challenges posed by these technologies. As we look ahead, several trends are emerging that will shape the compliance landscape for AI applications in legal practice.

One of the most significant trends is the increasing emphasis on transparency and accountability in AI systems. Legal professionals are becoming acutely aware that the algorithms driving AI tools must be interpretable and explainable. This is particularly crucial in contexts where AI is used for decision-making, such as predictive analytics in litigation or contract review. As a result, regulatory bodies are likely to mandate that firms provide clear documentation of how AI systems operate, including the data sources used and the decision-making processes involved. This push for transparency will not only enhance trust among clients but also mitigate the risks associated with opaque AI systems that could inadvertently perpetuate biases or lead to erroneous conclusions.

Moreover, the integration of ethical considerations into AI compliance frameworks is gaining traction. Legal practitioners are increasingly recognizing that the deployment of AI must align with ethical standards that govern the profession. This alignment will likely lead to the establishment of guidelines that dictate the ethical use of AI, ensuring that technologies are employed in ways that uphold the principles of justice and fairness. As a result, law firms may find themselves developing internal policies that not only comply with regulatory requirements but also reflect their commitment to ethical practices. This dual focus on compliance and ethics will be essential in fostering a culture of responsibility within the legal sector.

In addition to transparency and ethics, the future of AI compliance will also be influenced by the need for robust data protection measures. With the rise of AI comes the increased risk of data breaches and misuse of sensitive information. Consequently, regulatory frameworks are expected to tighten around data privacy laws, compelling legal firms to adopt stringent data governance practices. This will involve not only ensuring compliance with existing regulations, such as the General Data Protection Regulation (GDPR), but also proactively implementing measures to safeguard client data in AI applications. As firms navigate these complexities, they will need to invest in training and resources to ensure that their staff are well-versed in data protection principles.

Furthermore, as AI technologies evolve, so too will the legal standards governing their use. The rapid pace of innovation in AI means that regulatory bodies will need to remain agile, adapting existing laws and creating new ones to address emerging challenges. This dynamic environment will require legal professionals to stay informed about regulatory changes and to engage in ongoing dialogue with policymakers. By participating in these discussions, legal practitioners can help shape the regulatory landscape, ensuring that it is both effective and conducive to innovation.

In conclusion, the future of AI compliance and legal standards in the legal sector is poised for transformation. As transparency, ethics, and data protection become central tenets of AI governance, legal professionals will need to adapt their practices accordingly. By embracing these trends, the legal industry can not only enhance its compliance posture but also position itself as a leader in the responsible use of AI technologies. Ultimately, the successful integration of AI into legal practice will depend on a collaborative approach that prioritizes ethical considerations and regulatory adherence, paving the way for a more accountable and innovative legal landscape.

Conclusion

The regulatory future of AI in the legal sector is likely to focus on ensuring ethical use, transparency, and accountability while balancing innovation and efficiency. As AI technologies continue to evolve, regulators will need to establish frameworks that address issues such as data privacy, bias, and the unauthorized practice of law. Collaboration between legal professionals, technologists, and regulators will be essential to create guidelines that foster trust and protect the rights of individuals while enabling the legal sector to leverage AI’s potential for improved service delivery and access to justice. Ultimately, a proactive and adaptive regulatory approach will be crucial in navigating the complexities of AI integration in the legal field.